T4T LAB 2019 Texas A&M University. Invited Professor: Joris Putteneers.

Team: Nicholas Houser, James Hardt, Nathan Gonzalez, Daniel Wang.

Territory

Our project is discussed in terms of simulation software and the algorithmic processing of interpretations of objects as data across several contextual territories. The Queer Object is this fluctuation: the ability to transcend a series of mediums, the raw, the cooked, and the synthetic.

The queer object references contextual territory through a series of acts of pointing that never define, following the Derridean notion of indifferance. The algorithm, interfacing with itself, exhibits indifferance: moments where it does not necessarily decide what to do but rather just does. The algorithm works the same way in establishing its understanding, through a series of interfaces with contextual objects.

The first relationship is territorial. This is an epistemological quest of looking for and pointing at, carried out through artifacting across the raw, cooked, and synthetic. The algorithm operates as the smooth territory itself, whereas the contextual territory operates as the vehicle for striation. The algorithm is not bound to a territory but places itself through a series of referential objects that can be artifacted from the contextual territory.

The algorithm can exist in all forms of territory because it is always pointing at these moments but never actually defines them. The territory is defined the moment the context is referenced and artifacted.

The act of indifference and the vicarious causation of referenced objects cause the contextual territory to become the vehicle of striation.

Raw, Cooked, Synthetic

Each territory represents the nature of the Raw, the Cooked, or the Synthetic. The artifacts from each territory are the results of incomplete understandings of contextual objects due to indifferance.

The Raw is a proto-territory that yields an ancestral algorithm. Since the algorithm interprets numerical data, the artifacting process consists of a series of photogrammetric scans of objects found within the proto-environment, which are then translated into a Cartesian coordinate system. The data was then run through the scripting of the algorithm itself to recast it in the algorithm's own image.
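The translation of a scan into Cartesian coordinates can be illustrated with a minimal sketch. It assumes the photogrammetry software exports a Wavefront OBJ mesh, which the project text does not state, and the names `parse_obj_vertices`, `center_on_origin`, and `SAMPLE_OBJ` are hypothetical. It stands in for the first step only, not for the project's own scripting.

```python
from typing import List, Tuple

Point = Tuple[float, float, float]


def parse_obj_vertices(obj_text: str) -> List[Point]:
    """Collect the x, y, z coordinates from the 'v' records of OBJ text."""
    points: List[Point] = []
    for line in obj_text.splitlines():
        parts = line.split()
        # Only geometric vertices start with the exact token "v";
        # normals ("vn"), texture coordinates ("vt"), and faces ("f") are skipped.
        if len(parts) >= 4 and parts[0] == "v":
            points.append((float(parts[1]), float(parts[2]), float(parts[3])))
    return points


def center_on_origin(points: List[Point]) -> List[Point]:
    """Shift the point set so that its centroid sits at the origin."""
    if not points:
        return []
    n = len(points)
    cx = sum(p[0] for p in points) / n
    cy = sum(p[1] for p in points) / n
    cz = sum(p[2] for p in points) / n
    return [(x - cx, y - cy, z - cz) for x, y, z in points]


# Hypothetical scan fragment: a single square face, for illustration only.
SAMPLE_OBJ = """
# hypothetical scan fragment
v 0.0 0.0 0.0
v 2.0 0.0 0.0
v 2.0 2.0 0.0
v 0.0 2.0 0.0
vn 0.0 0.0 1.0
f 1 2 3 4
"""

points = parse_obj_vertices(SAMPLE_OBJ)
centered = center_on_origin(points)
```

Once the scan is reduced to a plain list of coordinates, it is in the same numerical language as any other input, which is what would let a script process it without regard to where the object came from.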

The Cooked is a territory that contains a series of objects created by CAD. This denotes ideas of construction, technicality, and complete control of Euclidean geometry. These objects are easier for the algorithm to synthesize because they share its language of numerical data, which causes an overlap in data and results in the kitbashing of referenced objects. The data was then run through the algorithm to put it into its own image.

The Synthetic is a hyperreal territory. The hyperreal is a system of software simulation simulating itself. This territory only exists within the digital environment. The objects within it are produced digitally and are only meant to act in the digital realm. Since the algorithm does not care to observe every data point of the object, the artifacts created are the results of the jumbling of data points and the creation of simulated simulation. Hyperreality is the terminal stage of simulation.

Maturity & Queerness

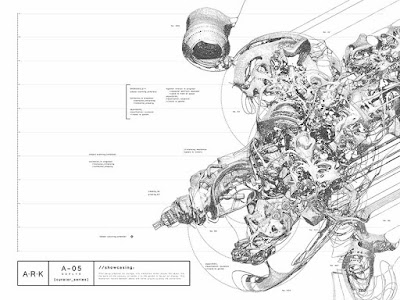

Since the algorithm is in a constant state of flux, both in posture and territoriality, maturity and senescence can be derived. The duration allows the algorithm to continue adding artifacts.

Queerness emerges from the constant referencing of objects and the striation of the algorithm through them. The position of queerness can be seen in the vector density drawing, the section cuts, and the geometrical resolution. As the algorithm continues its maturation, the flux can be seen in a change in the density of the framework of vector trails. The vector trails are the foundation of the generative nature of the project workflow; as the overlap of trails unravels, the substance that can be territorialized by the artifacts is depleted.
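One way to read a change in trail density numerically is to count trail sample points per grid cell. The sketch below is only an illustration under assumptions: it treats each trail as a list of 2D points, and the function `trail_density`, the `cell_size` parameter, and the sample trails are all hypothetical rather than taken from the project.

```python
from collections import Counter
from typing import Dict, Iterable, List, Tuple

Point2D = Tuple[float, float]
Cell = Tuple[int, int]


def trail_density(trails: Iterable[List[Point2D]], cell_size: float) -> Dict[Cell, int]:
    """Count how many trail sample points fall into each square grid cell."""
    counts: Counter = Counter()
    for trail in trails:
        for x, y in trail:
            # Floor division maps a coordinate to the index of its grid cell.
            cell = (int(x // cell_size), int(y // cell_size))
            counts[cell] += 1
    return dict(counts)


# Hypothetical trails: two short polylines that overlap near the origin.
trails = [
    [(0.1, 0.1), (0.6, 0.4), (1.2, 0.9)],
    [(0.2, 0.3), (1.1, 1.4)],
]

density = trail_density(trails, cell_size=1.0)
```

Comparing such counts between two stages of the simulation would show where overlapping trails have thinned out, which is one possible measure of the depletion described above.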

The resolution of the geometrical framework is also at the will of the maturation of the algorithm. Areas of artifact versus algorithm can now be identified not only in terms of geometrical relations but also through geometrical resolution and subdivision.

The flux of artifacts begins to divide and disassemble the area in which the algorithm can operate. The addition of these artifacts creates new spaces while dividing others. Through the senescence of the algorithm and the addition of artifacts, the posture of the algorithm changes as it matures. As it references the contextual territory under constant acts of striation, the queer object is exhausted. The duration of the algorithm can be seen through the decay and dismemberment of the original algorithm and the growth of invasive artifacts. The duration does not compromise the substance of the object, because the loss of posture is a matter of kind and not of degree.

Simulation

The algorithm does not need to exist in our current reality; rather, we are laying the framework for the next reality, which it will occupy: the architecture of the hyperreal. What, after all, is the difference between that and the architecture of the a priori world of our reality?

The Hyperreal is the terminal stage of the simulation because the hyperreal is indistinguishable from reality, and thus the process is repeated from the raw to the cooked to the synthetic, recursively.

Conclusion

Algorithmic exhaustion, theory, and queerness are all connected through the results of simulation software. The essence of simulation is queer. The aspects of flux that it produces, in terms of fluidity, change, and indefinability, relate directly back to queer theory.

The workflow of this project showcases machine vision and simulation software being applied to all principles of territory, section, elevation, program, and aesthetics. They are all adaptive to different variables that can alter both the simulation program and the algorithm, which ultimately leads to the absolute exhaustion of the workflow, ending the life of the queer object and the simulation.